Publications

1. CHI 2020 Late-Breaking Work

Masakazu Iwamura, Naoki Hirabayashi, Zheng Cheng, Kazunori Minatani, and Koichi Kise. 2020.

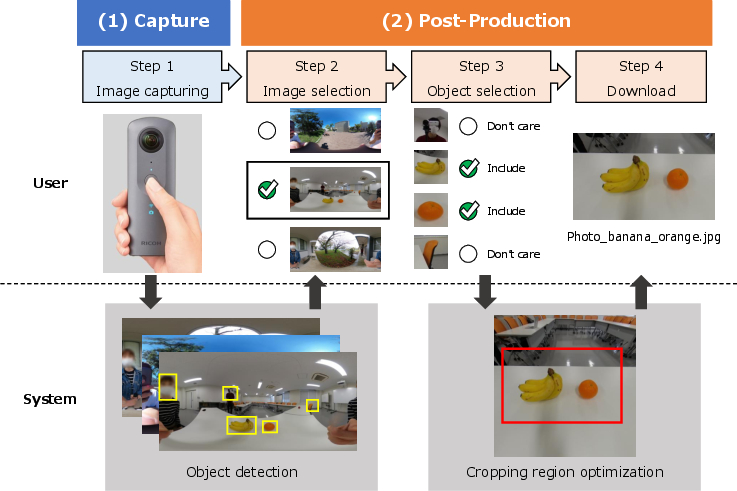

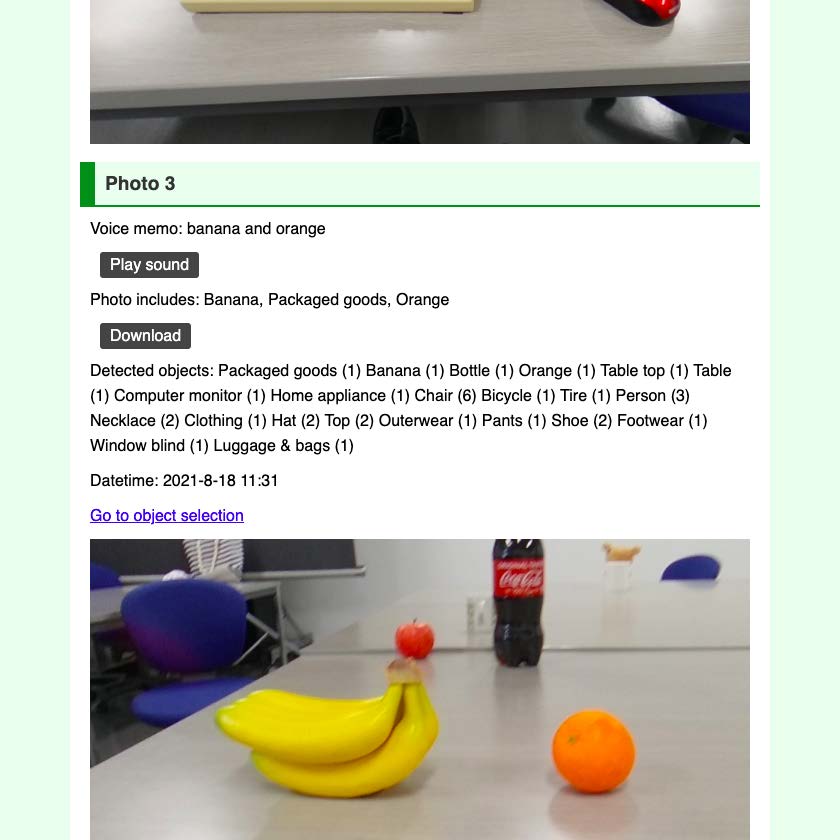

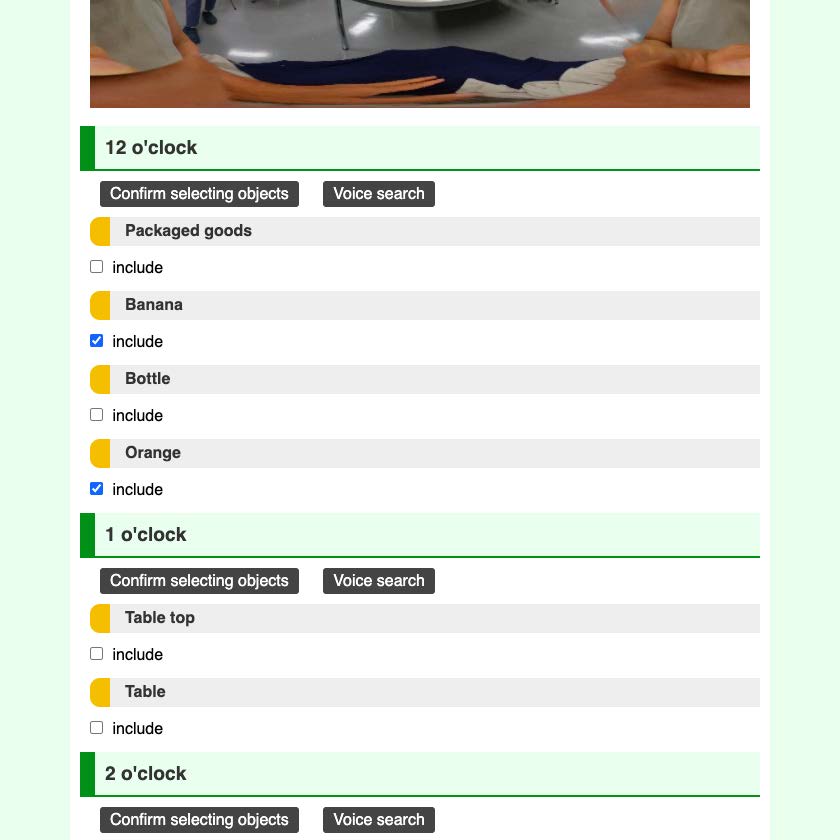

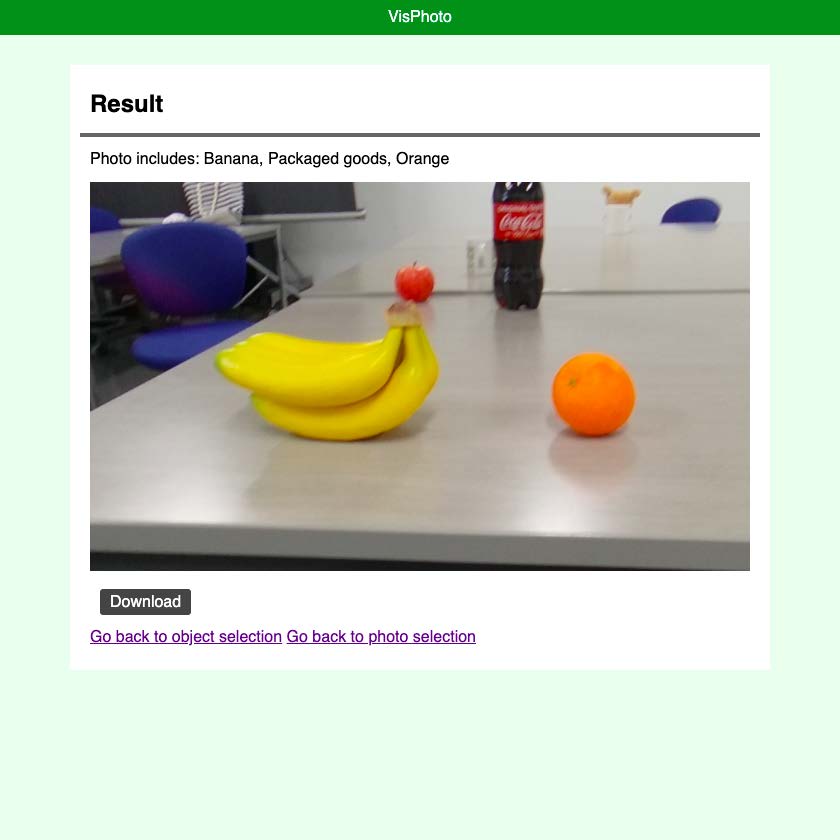

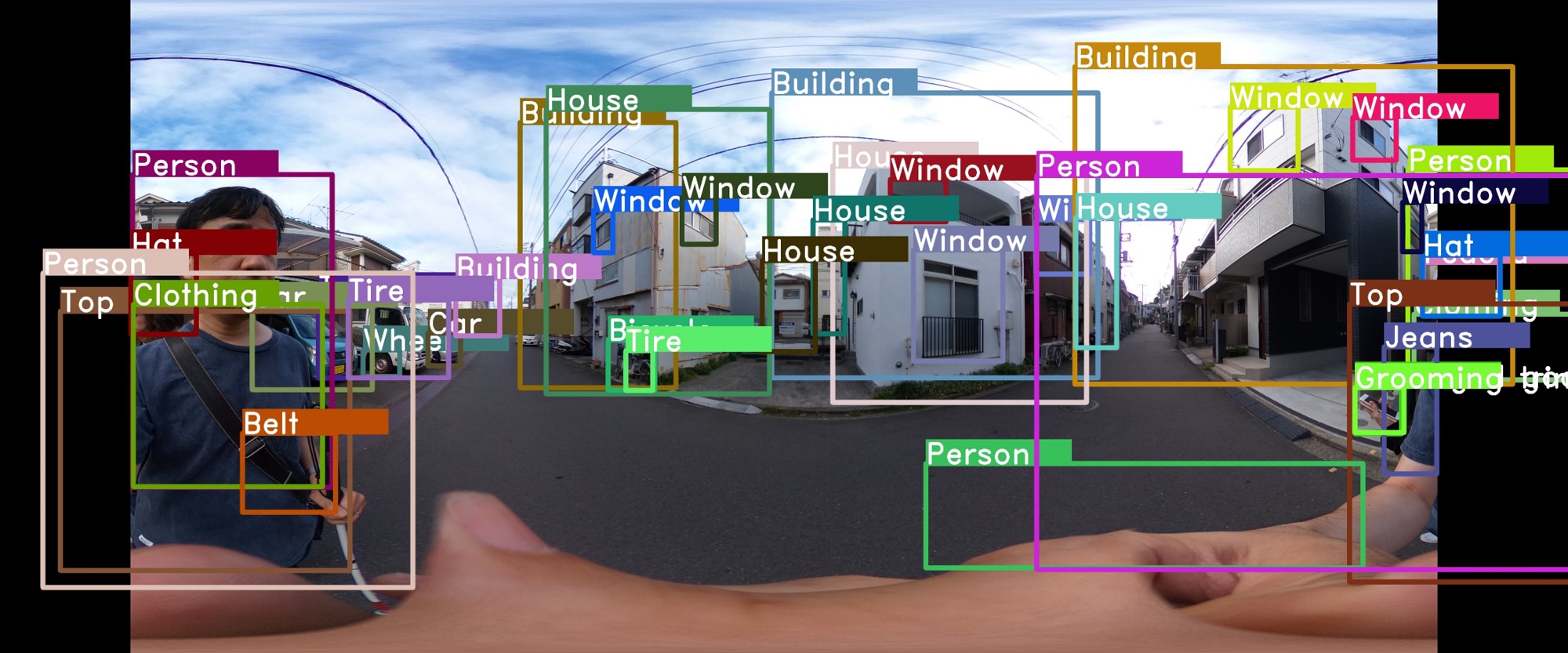

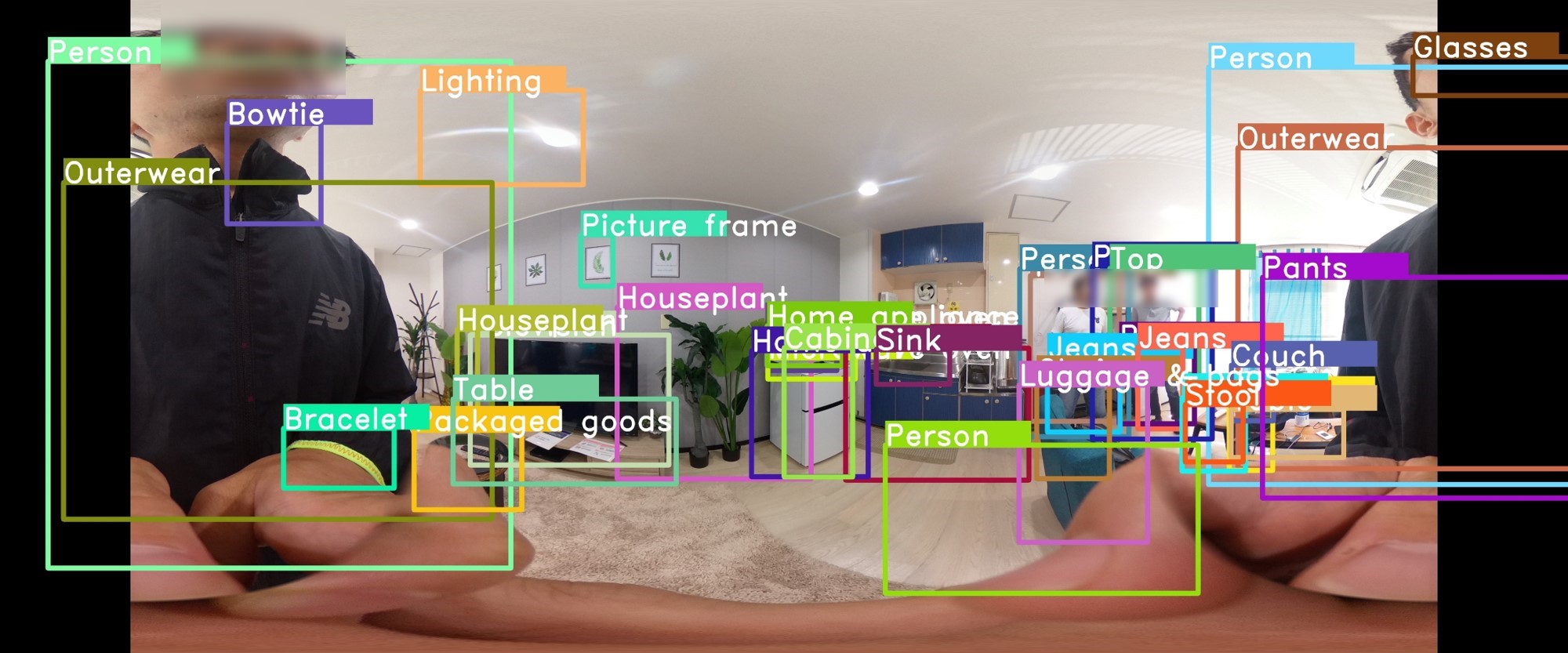

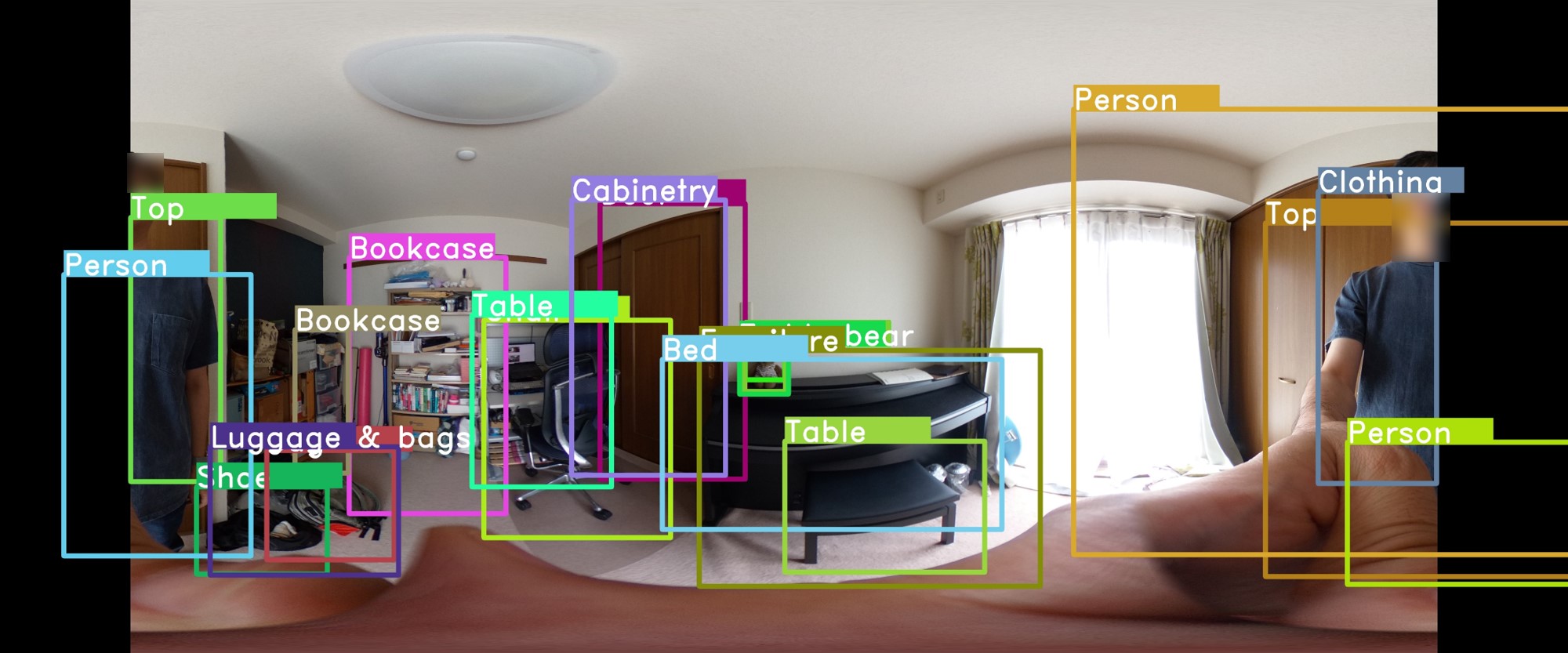

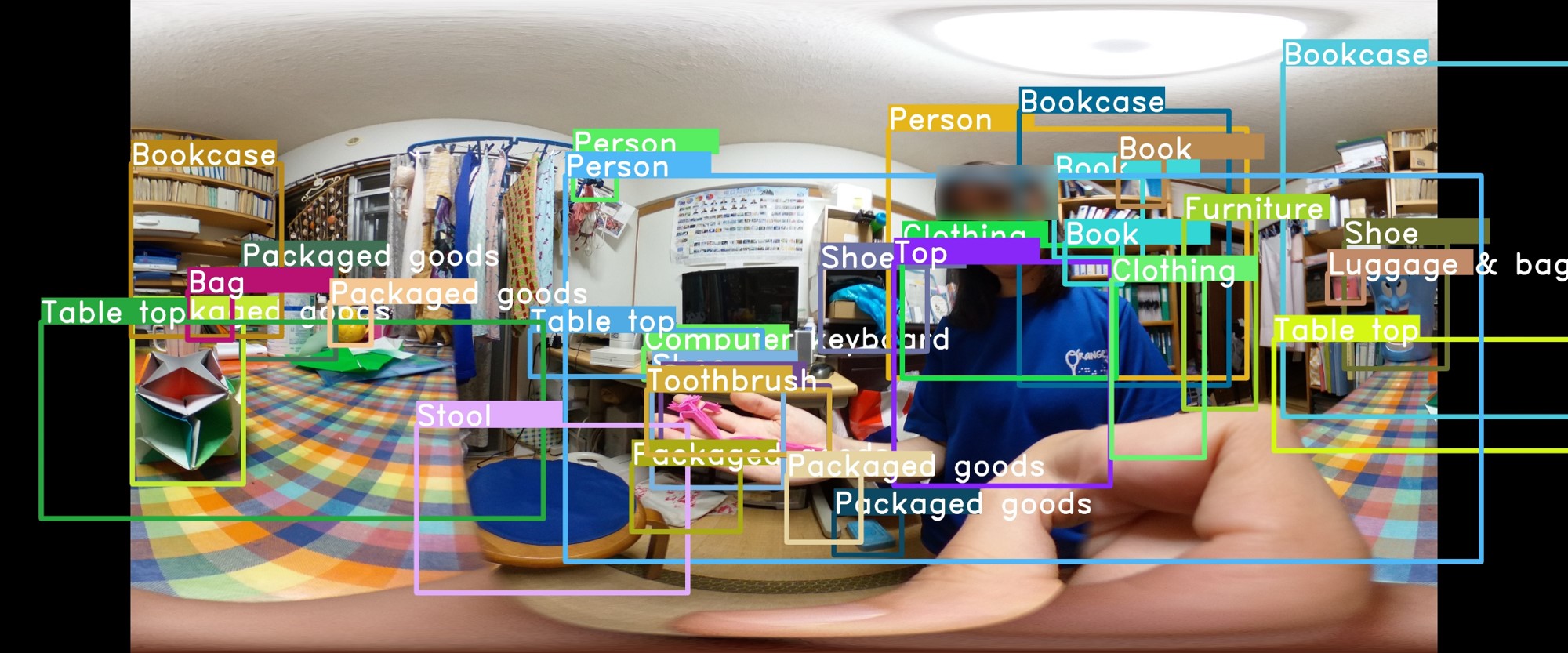

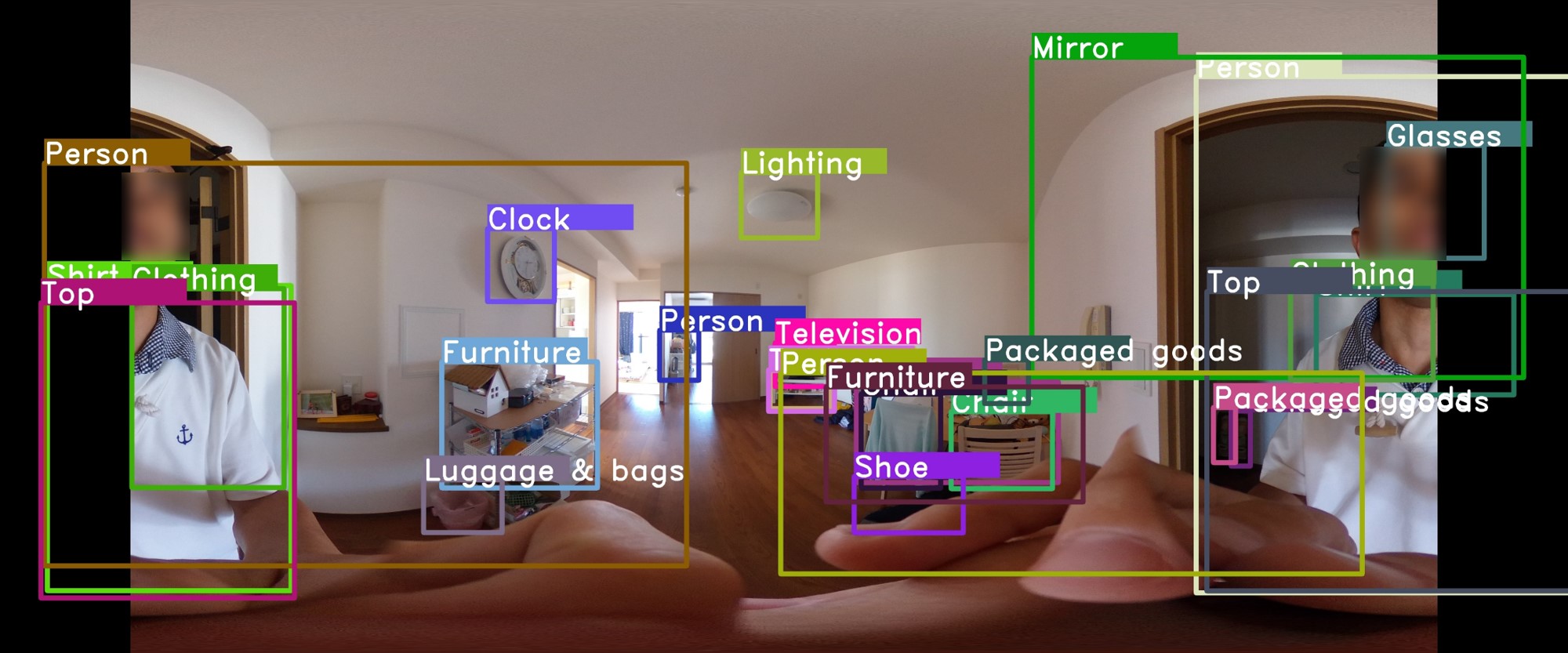

VisPhoto: Photography for People with Visual Impairment as Post-Production of Omni-Directional Camera Image.

In Extended Abstracts of the 2020 ACM CHI Conference on Human Factors in Computing Systems.

https://doi.org/10.1145/3334480.3382983

@InProceedings{Iwamura_CHI2020_LBW,

author = {Masakazu Iwamura and Naoki Hirabayashi and Zheng Cheng and Kazunori Minatani and Koichi Kise},

booktitle = {Extended Abstracts of the 2020 ACM CHI Conference on Human Factors in Computing Systems},

title = {{VisPhoto}: Photography for People with Visual Impairment as Post-Production of Omni-Directional Camera Image},

doi = {10.1145/3334480.3382983},

year = {2020},

month = apr,

}

2. IEICE Transactions on Information and Systems (in Japanese)

Masakazu Iwamura, Naoki Hirabayashi, Zheng Cheng, Kazunori Minatani, and Koichi Kise. 2021.

Photography for People with Visual Impairment by Photo-Taking with Omni-Directional Camera and Its Post-Production. Vol. J104-D, No. 8, pp. 663–677. In Japanese.

A 2021 IEICE Best Paper Award Winner.

https://doi.org/10.14923/transinfj.2020JDP7069

(The PDF is available on our lab's webpage.)

@Article{Iwamura_IEICE2021ja,

author = {Masakazu Iwamura and Naoki Hirabayashi and Zheng Cheng and Kazunori Minatani and Koichi Kise},

journaltitle = {IEICE Transactions on Information and Systems (Japanese Edition)},

title = {Photography for People with Visual Impairment by Photo-Taking with Omni-Directional Camera and Its Post-Production},

doi = {10.14923/transinfj.2020JDP7069},

volume = {J104-D},

number = {8},

pages = {663--677},

year = 2021,

month = aug,

language = {Japanese},

}

3. Proc. ASSETS 2023

Naoki Hirabayashi, Masakazu Iwamura, Zheng Cheng, Kazunori Minatani, and Koichi Kise. 2023.

VisPhoto: Photography for People with Visual Impairments via Post-Production of Omnidirectional Camera Imaging.

In Proceedings of the 25th International ACM SIGACCESS Conference on Computers and Accessibility.

Best Paper Award Winner at ASSETS 2023.

https://doi.org/10.1145/3597638.3608422

@InProceedings{Hirabayashi_ASSETS2023,

author = {Naoki Hirabayashi and Masakazu Iwamura and Zheng Cheng and Kazunori Minatani and Koichi Kise},

booktitle = {Proceedings of the 25th International ACM SIGACCESS Conference on Computers and Accessibility},

title = {{VisPhoto}: Photography for People with Visual Impairments via Post-Production of Omnidirectional Camera Imaging},

doi = {10.1145/3597638.3608422},

year = {2023},

month = oct,

}